IT professionals: if you need to select a hyperconverged infrastructure (HCI) solution for your organization’s edge sites, do you automatically choose something with high specifications, or do you stop to analyze your actual compute, storage and processing requirements?

Why ask? Because a growing number of IT professionals are deploying all flash HCI solutions that are 10 to 20 times more powerful than their organization actually needs, in the hope that this will solve their enterprise applications’ performance bottlenecks or help them prepare for the future.

But bigger isn’t always better. Not only does over-provisioning often fail to fix performance problems, it can also strain the budget. Here are a few things to keep in mind.

All Flash Won’t Always Improve Performance at the Edge

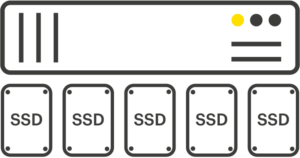

Let’s say an organization is deploying a three-server all flash solution capable of 5,000 to 10,000 IOPS. It has just a handful of staff at each edge location, runs basic apps with minimal compute needs, and requires only 500 to 1,000 IOPS.

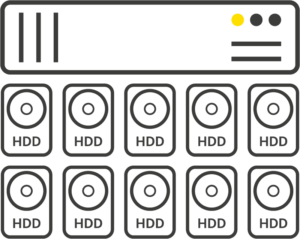

In a scenario like this, the company’s system would be over-provisioned by roughly 5 to 20 times its actual needs. Instead of deploying a solution that would sit at just 5 to 20% utilization, they’d be much better off with a lower-powered off-the-shelf 2-node solution.
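The ratios in this scenario are easy to check. The short Python sketch below (the function name `overprovisioning` is purely illustrative) compares each end of the 5,000 to 10,000 IOPS capability range with each end of the 500 to 1,000 IOPS requirement. Like-for-like ends give about 10x, while the extremes span 5x to 20x, which corresponds to 5 to 20% utilization.

```python
def overprovisioning(capable_iops: float, required_iops: float) -> tuple[float, float]:
    """Return (over-provisioning factor, fraction of capability actually used)."""
    factor = capable_iops / required_iops
    utilization = required_iops / capable_iops
    return factor, utilization


# Figures from the scenario: what the all flash cluster can deliver
# versus what the edge site actually needs.
capable_range = (5_000, 10_000)
required_range = (500, 1_000)

for capable in capable_range:
    for required in required_range:
        factor, used = overprovisioning(capable, required)
        print(f"{capable:>6} IOPS available, {required:>5} needed: "
              f"{factor:4.1f}x over-provisioned, {used:.0%} used")
```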

By doing so, they’d still comfortably meet their performance needs and achieve the level of redundancy required to keep their data safe, with the option of encrypting it as well.

Another common misconception is that all flash storage solutions will solve issues with enterprise application performance.

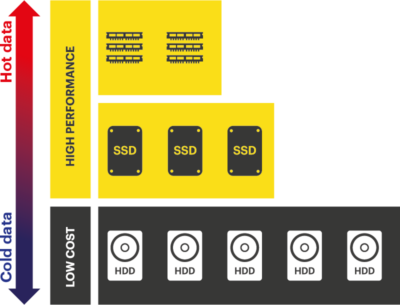

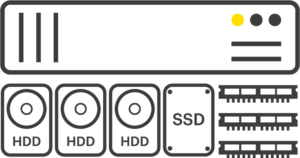

When encountering performance bottlenecks like these, defaulting to an all flash array is unlikely to be the solution you need. Issues like these can often be eliminated by designing an IT infrastructure that makes effective use of different storage tiers. A design that combines memory caching and a small flash drive for ‘hot’, frequently accessed data with magnetic disks for ‘cold’, less-used data will meet most requirements of edge computing environments.
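To make the tiering idea concrete, here is a minimal Python sketch of frequency-based placement: count accesses per block over some window, keep the hottest blocks on the small flash tier, and leave the long tail on magnetic disk. The block names, access counts, and the `place_by_heat` and `flash_hit_share` helpers are hypothetical, and this is a simplified stand-in rather than the caching algorithm of any particular product.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    flash: list[str]  # 'hot' blocks served from the small flash tier
    hdd: list[str]    # 'cold' blocks left on magnetic disk


def place_by_heat(access_counts: dict[str, int], flash_slots: int) -> Placement:
    """Put the most frequently accessed blocks on flash and the rest on HDD.

    access_counts: hypothetical per-block access counters from a sampling window.
    flash_slots: how many blocks the flash tier can hold.
    """
    # Hottest first; ties broken by block name so the result is deterministic.
    ranked = sorted(access_counts, key=lambda block: (-access_counts[block], block))
    return Placement(flash=ranked[:flash_slots], hdd=ranked[flash_slots:])


def flash_hit_share(access_counts: dict[str, int], placement: Placement) -> float:
    """Fraction of all accesses that land on the flash tier."""
    total = sum(access_counts.values())
    return sum(access_counts[b] for b in placement.flash) / total


# Illustrative workload: a few busy blocks and a long tail of rarely read data.
counts = {"db-index": 950, "db-log": 700, "vm-boot": 40, "backups": 5, "archive-2019": 2}
placement = place_by_heat(counts, flash_slots=2)
print("flash:", placement.flash)
print("hdd:  ", placement.hdd)
print(f"accesses served from flash: {flash_hit_share(counts, placement):.0%}")
```

With these made-up counts, two flash slots absorb roughly 97% of accesses, which is the effect a small flash tier relies on. Real workloads are usually less skewed and shift over time, so tiering software typically re-evaluates placement periodically.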

A cluster using magnetic disks could be updated to take advantage of memory caching at the click of a button, giving users much faster reads and a significant improvement in writes.
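The benefit of a memory read cache in front of magnetic disks can be estimated with a simple weighted average: effective latency = hit ratio × memory latency + (1 − hit ratio) × disk latency. The Python sketch below pairs a minimal LRU read cache with that formula. The class and function names are illustrative, the 0.01 ms and 8 ms latencies are assumed round numbers rather than measurements, and only reads are modelled; improving writes would need a separate write-back design not shown here.

```python
from collections import OrderedDict
from typing import Callable


class LRUReadCache:
    """Minimal in-memory LRU read cache sitting in front of slower disk reads."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._entries: "OrderedDict[int, bytes]" = OrderedDict()
        self.hits = 0
        self.misses = 0

    def read(self, block: int, read_from_disk: Callable[[int], bytes]) -> bytes:
        if block in self._entries:
            self.hits += 1
            self._entries.move_to_end(block)  # mark as most recently used
            return self._entries[block]
        self.misses += 1
        data = read_from_disk(block)  # slow path: go to magnetic disk
        self._entries[block] = data
        if len(self._entries) > self.capacity:
            self._entries.popitem(last=False)  # evict the least recently used block
        return data

    @property
    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def effective_read_latency_ms(hit_ratio: float, cache_ms: float, disk_ms: float) -> float:
    """Average read latency when hits come from memory and misses go to disk."""
    return hit_ratio * cache_ms + (1 - hit_ratio) * disk_ms


def fake_disk(block: int) -> bytes:
    """Stand-in for a real disk read."""
    return f"block-{block}".encode()


cache = LRUReadCache(capacity=3)
for block in [1, 2, 3, 1, 2, 3, 1, 2, 3, 4]:  # a small, repeatedly read hot set
    cache.read(block, fake_disk)

# Assumed, illustrative latencies: ~0.01 ms for a memory hit, ~8 ms for an HDD random read.
latency = effective_read_latency_ms(cache.hit_ratio, cache_ms=0.01, disk_ms=8.0)
print(f"hit ratio {cache.hit_ratio:.0%}, effective read latency {latency:.2f} ms")
```

Even this tiny trace, with 4 cold misses out of 10 reads, brings average read latency down to about 40% of the raw disk figure; a warm cache serving a stable hot set would do better still.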

The Real Cost of an All Flash HCI vs Alternatives

| | Disk only | All flash | Hybrid |
|---|---|---|---|
| Latency | High | Low | Low |
| Capacity | High | Low / Medium | High |
| Performance | Low / Medium | High | High |
| Cost per GB | $ | $$$ | $$ |
| Cost per IOPS | $$$ | $ | $ |

In cases where an organization’s performance and compute needs are low, all flash enterprise storage solutions can cost up to 10 times more than those based solely on HDDs.

This may come as a surprise to some, especially since all flash storage is commonly said to consume less power than magnetic disks, which would lower running costs. But power consumption ultimately depends on how many disks you’re using and how you’re using them.

Properly understanding your storage performance needs and configuring a solution to match those requirements can remove the need for a costly all flash solution.
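Sizing to actual requirements is essentially a filter-then-minimize problem over the cost metrics in the table above: discard configurations that can’t meet the measured capacity and IOPS (plus some growth headroom), then pick the cheapest of what remains. In this Python sketch the `Config` type, the `cheapest_fit` helper, the 1.5x headroom factor, and all capacities, IOPS figures and prices are placeholders for illustration, not real product data.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class Config:
    name: str
    usable_tb: float
    iops: int
    price: float  # relative cost units (placeholder, not a real quote)

    def cost_per_gb(self) -> float:
        return self.price / (self.usable_tb * 1_000)

    def cost_per_iops(self) -> float:
        return self.price / self.iops


def cheapest_fit(configs: Sequence[Config], need_tb: float, need_iops: float,
                 headroom: float = 1.5) -> Optional[Config]:
    """Cheapest configuration that covers measured needs plus a growth margin."""
    fits = [c for c in configs
            if c.usable_tb >= need_tb * headroom and c.iops >= need_iops * headroom]
    return min(fits, key=lambda c: c.price, default=None)


# Placeholder candidates for illustration only; substitute real vendor figures.
configs = [
    Config("2-node disk only", usable_tb=8, iops=1_200, price=10),
    Config("2-node hybrid", usable_tb=8, iops=4_000, price=14),
    Config("3-node all flash", usable_tb=6, iops=10_000, price=60),
]

choice = cheapest_fit(configs, need_tb=4, need_iops=1_000)
for c in configs:
    print(f"{c.name:<18} cost/GB {c.cost_per_gb():.5f}  cost/IOPS {c.cost_per_iops():.4f}")
print("cheapest fit:", choice.name if choice else "none")
```

With a 4 TB, 1,000 IOPS requirement and 1.5x headroom, the disk-only option falls short on IOPS (1,200 < 1,500) and the hybrid configuration wins on price; with `headroom=1.0` the disk-only option becomes the cheapest fit.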

Furthermore, enterprise-grade magnetic disks offer mean time between failures (MTBF) figures similar to those of SSDs, making them comparably reliable by that measure. The argument that SSDs justify their additional cost on reliability alone does not stack up.
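MTBF is easier to compare once converted into an annualized failure rate (AFR). Under the usual constant-failure-rate assumption, AFR = 1 − exp(−8,766 / MTBF), where 8,766 is the number of hours in an average year. The Python sketch below applies that conversion; the 2,000,000-hour figures and the 12-drive count are placeholders, not datasheet values. Equal MTBF gives equal AFR under this model, but the model ignores wear-out, workload and firmware effects, so the comparison is only as good as the quoted MTBF figures themselves.

```python
import math

HOURS_PER_YEAR = 8_766  # 365.25 days x 24 hours


def annualized_failure_rate(mtbf_hours: float) -> float:
    """Chance a drive fails within a year of 24x7 operation.

    Assumes a constant failure rate (exponential model), the usual way to
    convert MTBF into AFR; it does not capture wear-out or workload effects.
    """
    return 1 - math.exp(-HOURS_PER_YEAR / mtbf_hours)


def expected_failures_per_year(drives: int, mtbf_hours: float) -> float:
    """Expected number of drive failures per year across a group of drives."""
    return drives * annualized_failure_rate(mtbf_hours)


# Hypothetical example: two drive types quoting the same (placeholder) MTBF.
for label, mtbf in [("enterprise HDD", 2_000_000), ("enterprise SSD", 2_000_000)]:
    afr = annualized_failure_rate(mtbf)
    failures = expected_failures_per_year(12, mtbf)
    print(f"{label}: AFR {afr:.2%}, ~{failures:.2f} failures/yr across 12 drives")
```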

So, Is All Flash Better For Edge Locations?

Well, that really depends on a company’s individual circumstances and site needs.

All flash can perform well at the edge in some instances. But where an organization has low compute needs, a hyperconverged infrastructure solution that uses a cost-effective combination of storage types, rather than all flash, is likely to work better. The same goes for fixing application performance issues: other approaches may solve the problem more cost-effectively than an all flash array.

A leader at the edge for over 15 years, StorMagic SvSAN is flexible enough to adapt to your business needs, whether you require a solution inside an office space, or on an oil rig in the middle of the Atlantic. We’re well-versed in deploying a range of solutions that can help to reduce your storage costs, without compromising the availability of, and access to, your precious data.

To learn more about how SvSAN can be configured to leverage storage tiers for maximum performance and cost-effectiveness, check out SvSAN’s Predictive Storage Caching features. Because SvSAN doesn’t require expensive hardware and can run on any x86 server with any storage type, it can operate at a fraction of the cost of all flash.

We understand that finding the best solution for your business can be tricky: sometimes, even after doing your research, you’re left with more questions than answers. If this sounds like you, try enlisting the help of StorMagic’s team of experts. With experience helping organizations around the world meet their edge site needs, they’re well versed in walking you through the solution that works best for your organization.