Industrial IoT is a natural extension of the process control systems that have dominated factory floors for decades. In recent years, the growing number of sensors and devices monitoring machinery and output has allowed manufacturers to move from monitoring individual devices to monitoring entire processes from a single screen. “Industrial IoT” has emerged as the catch-all phrase for this change.

Before industrial IoT – many screens, many alerts

In the past, each machine or system had its own monitoring and alerting function, so monitoring a process across stages required multiple systems with different interfaces and alerting mechanisms. Controlling a process literally meant walking from one machine to the next, watching for warning lights, reading and responding to meters in the “red zone”, and listening for alarm bells.

As industrial IoT has grown, the number of sensors and endpoints on the factory floor has only increased, making the need to consolidate their management and monitoring ever more pressing.

Modern monitoring and process control applications are built on traditional IT systems, using industry-standard operating systems, hypervisors, and development tools. These applications collect, filter, clean, transform, consolidate, and analyze data from multiple systems in real time to deliver a monitoring and process control dashboard.
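
To make those stages concrete, here is a minimal Python sketch, assuming two hypothetical machines that report temperature in different formats. The payload fields (`temp_c`, `tF10`), the machine names, and the 90 °C alert limit are invented for illustration; a real dashboard would read from its own sources and limits.

```python
from dataclasses import dataclass
from statistics import mean

# Hypothetical raw payloads: machine A sends Celsius as a float, machine B
# sends tenths of a degree Fahrenheit as a string. Both include bad records.
RAW_A = [{"id": "press-1", "temp_c": 71.5}, {"id": "press-1", "temp_c": None}]
RAW_B = [{"tag": "oven-2", "tF10": "1994"}, {"tag": "oven-2", "tF10": "bad"}]

@dataclass
class Reading:
    machine: str
    temp_c: float  # common unit after transformation

def from_a(rec):
    # Clean: drop records with missing values.
    if rec.get("temp_c") is None:
        return None
    return Reading(rec["id"], float(rec["temp_c"]))

def from_b(rec):
    # Transform: parse tenths of °F and convert to °C; drop unparsable values.
    try:
        temp_f = int(rec["tF10"]) / 10
    except (KeyError, ValueError):
        return None
    return Reading(rec["tag"], round((temp_f - 32) * 5 / 9, 1))

def consolidate(*sources):
    # Collect from every source, normalize, and filter out rejected records.
    readings = []
    for parser, records in sources:
        for rec in records:
            r = parser(rec)
            if r is not None:
                readings.append(r)
    return readings

def dashboard(readings, limit_c=90.0):
    # Analyze: one row per machine with its mean temperature and an alert flag.
    by_machine = {}
    for r in readings:
        by_machine.setdefault(r.machine, []).append(r.temp_c)
    return {m: {"mean_c": round(mean(v), 1), "alert": max(v) > limit_c}
            for m, v in by_machine.items()}

readings = consolidate((from_a, RAW_A), (from_b, RAW_B))
print(dashboard(readings))
```

Each parser knows only its own machine's format, so adding another system means adding one more parser rather than another screen.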

The consolidation of data and alerts into a dashboard allows for greater efficiency, improved quality, and enhanced reporting, but it also drives the need for high availability from the supporting infrastructure. The dashboard that monitors and possibly controls all systems in a process can’t go down. Fortunately, the cost of enabling highly available IT infrastructure has dramatically decreased.
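
A back-of-the-envelope calculation shows why redundant nodes matter here. If each node is available a fraction a of the time and the dashboard stays up as long as any one of n nodes is up, availability is 1 − (1 − a)^n. The Python sketch below assumes independent failures and instant failover, which real deployments only approximate; the 99% node availability is a hypothetical figure.

```python
HOURS_PER_YEAR = 24 * 365  # 8760, ignoring leap years

def cluster_availability(node_availability: float, nodes: int) -> float:
    """Availability of a service that stays up while at least one node is up.

    Idealized: assumes node failures are independent and failover is instant.
    """
    return 1 - (1 - node_availability) ** nodes

def downtime_hours_per_year(availability: float) -> float:
    return (1 - availability) * HOURS_PER_YEAR

# Hypothetical 99%-available nodes, alone and as a two-node cluster.
for n in (1, 2):
    a = cluster_availability(0.99, n)
    print(f"{n} node(s): availability {a:.4%}, "
          f"~{downtime_hours_per_year(a):.1f} h downtime/year")
```

Under these assumptions a second node cuts expected downtime from about 87.6 hours to under an hour per year. Correlated failures, such as a shared power feed or a single building, erode that gain, which is one reason to place nodes in separate locations.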

Leveraging Branch Office IT on the Factory Floor

Many information technology innovations were first adopted in the branch offices of large enterprises. Server virtualization enabled the consolidation of multiple applications onto fewer servers, reducing the IT footprint. Centralized monitoring of remote-site IT infrastructure reduced or eliminated the need for on-premises IT staff in remote locations. Clustering and automated failover tools enabled non-disruptive maintenance and upgrades of both software and hardware. At the same time, software-defined storage, together with the greater storage capacity and performance of industry-standard servers, eliminated the need for expensive external disk arrays and storage area networks. These were replaced by internal hard disk drives, solid-state drives (SSDs), and flash memory combined with data mirroring, which provide the high availability and data sharing that automated failover requires.
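
The interplay of mirroring and failover can be illustrated with a toy Python model: every write goes to all healthy nodes before it is acknowledged, so when one node fails, another still holds the data and can serve reads. The `Node` and `MirroredVolume` classes are hypothetical and do not describe how any particular product implements mirroring; they also omit resynchronizing a node when it returns.

```python
class Node:
    """Hypothetical server node; `disk` stands in for its internal drives."""

    def __init__(self, name):
        self.name = name
        self.healthy = True
        self.disk = {}

class MirroredVolume:
    """Toy synchronous mirror across nodes with automatic read failover."""

    def __init__(self, *nodes):
        self.nodes = list(nodes)

    def _healthy(self):
        up = [n for n in self.nodes if n.healthy]
        if not up:
            raise RuntimeError("no healthy nodes: volume unavailable")
        return up

    def write(self, key, value):
        # Synchronous mirroring: return only after every healthy node has the data.
        for node in self._healthy():
            node.disk[key] = value

    def read(self, key):
        # Failover: serve from the first node that is still up.
        return self._healthy()[0].disk[key]

a, b = Node("node-a"), Node("node-b")
vol = MirroredVolume(a, b)
vol.write("line-3/setpoint", 180)
a.healthy = False  # simulate a hardware failure or planned maintenance
print(vol.read("line-3/setpoint"))  # still served, now from node-b
```

Because both copies are always current, taking one node down for an upgrade looks to the application exactly like a failure it already survives.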

Branch offices of large enterprises were early adopters of these technologies because their applications ran on traditional datacenter-compatible infrastructure. Manufacturers were slower to adopt them because industrial control applications were often built on proprietary systems with industry-specific interfaces. Even for a single component of a process, the cost of high availability was often prohibitive, as the tools to enable non-stop monitoring and control had to be customized for each system. That is now changing: modern industrial IoT process control dashboards collect, filter, cleanse, and transform data from multiple disparate systems into a common language and data format, and they run on industry-standard hardware.

The Need for On-Premises IoT Monitoring

Using this newer approach, it is possible to monitor and control processes at remote locations from a central site. However, for geographically distributed organizations, especially those operating in low-labor-cost, non-urban locations or developing countries (where much manufacturing takes place), network bandwidth is often too unreliable, too slow, or too expensive to support real-time decision-making from a central location. These systems need to run on-premises in factories, on highly available infrastructure: when equipment operates outside acceptable quality or safety tolerances, operators need to take immediate action.
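
One way to picture this split is a store-and-forward pattern: tolerance checks run locally and raise alerts immediately, while readings queue up and are forwarded to the central site whenever the link allows. The Python sketch below is hypothetical; `EdgeMonitor`, the `send` callback standing in for a site's uplink, and the 20–80 tolerance band are all assumptions for illustration.

```python
from collections import deque

class EdgeMonitor:
    """Checks tolerances locally and forwards readings when the WAN allows.

    Hypothetical sketch: `send` stands in for whatever uplink a site uses and
    returns True if the reading reached the central site.
    """

    def __init__(self, low, high, send, buffer_size=10_000):
        self.low, self.high = low, high
        self.send = send
        self.backlog = deque(maxlen=buffer_size)  # oldest readings drop first if full
        self.alerts = []

    def ingest(self, machine, value):
        # Local decision: the alert never waits on the central site.
        if not self.low <= value <= self.high:
            self.alerts.append((machine, value))
        self.backlog.append((machine, value))
        self.flush()

    def flush(self):
        # Forward buffered readings in order; stop at the first failed send.
        while self.backlog:
            if not self.send(self.backlog[0]):
                return
            self.backlog.popleft()

# Simulated uplink that can be switched off to mimic an outage.
link_up = False
central = []

def send(reading):
    if link_up:
        central.append(reading)
    return link_up

mon = EdgeMonitor(low=20.0, high=80.0, send=send)
mon.ingest("mixer-4", 85.2)  # outage: alert raised locally, reading buffered
link_up = True
mon.ingest("mixer-4", 60.0)  # link restored: backlog drains in order
print(mon.alerts, central)
```

The out-of-tolerance reading triggers an alert during the outage, and the central site still receives the complete history once connectivity returns.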

Even if the application is only a monitoring dashboard and not a control system, the infrastructure should be highly available. Without IoT device monitoring and alerting, the supplier may fail to detect or prevent a product defect, or even a workplace accident, that could later result in a recall, injury to customers or employees, costly lawsuits, and irreversible damage to the brand’s reputation. Moreover, without continuous monitoring, the evidence needed to defend a liability claim may be lost.

The Benefits of IoT in Manufacturing

Industrial IoT is a rapidly growing market, and its solutions are essential for manufacturers now and in the future. Some of the most notable developments in the sector involve data monetization (a revenue-generating strategy) and new commercial models such as Equipment as a Service (EaaS).

But how do IoT solutions for manufacturing help the organizations leveraging them? Here are some of the benefits:

Increasing efficiencies and line optimization

IoT gives manufacturers the ability to automate processes using robotics and machines, improving operating efficiency. Combined with the ability to send information directly from networked components to employees, this boosts factory productivity and shortens time to market.

The rise of Industry 5.0 has seen more employees working alongside robots. Using more robots and machines has helped manufacturing organizations identify inefficiencies in the product cycle far sooner than before. Today it’s even easier and faster for manufacturing organizations to review factors like overall availability, machine capabilities, and current capacity across multiple plants – so identifying the most efficient place to produce specific products can be achieved quickly with little to no hassle.
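
The plant-selection idea can be sketched as a simple filter-then-rank step: keep only plants that are up, have the required capability, and have enough spare capacity, then pick the cheapest. The `Plant` fields, plant names, and costs in this Python sketch are hypothetical, and real scheduling would weigh more factors such as logistics and lead time.

```python
from dataclasses import dataclass

@dataclass
class Plant:
    name: str
    capabilities: set
    available_units: int     # spare capacity this period
    unit_cost: float         # cost to produce one unit here
    available: bool = True   # e.g. False during an outage

def best_plant(plants, capability, quantity):
    """Cheapest plant that is up, has the capability, and has the capacity."""
    candidates = [
        p for p in plants
        if p.available and capability in p.capabilities and p.available_units >= quantity
    ]
    return min(candidates, key=lambda p: p.unit_cost, default=None)

# Hypothetical plants; "east" is cheapest but currently down.
plants = [
    Plant("north", {"stamping", "welding"}, 500, 4.10),
    Plant("south", {"stamping"}, 2_000, 3.80),
    Plant("east", {"stamping", "welding"}, 5_000, 3.50, available=False),
]
choice = best_plant(plants, "welding", 400)
print(choice.name if choice else "no plant can take the order")
```

Here the order goes to "north": "south" cannot weld and "east" is unavailable, even though it would otherwise be cheapest.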

With edge computing complementing this technology, industrial IoT solutions let organizations monitor performance even at the most remote locations, make faster data-driven decisions, and identify disruptions and market fluctuations much earlier, with minimal latency.
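
A common edge-computing technique is to summarize raw sensor data locally and send only the summaries upstream. The Python sketch below, assuming hypothetical one-second readings from a vibration sensor, reduces each 60-second window to its minimum, maximum, mean, and count; the window length and data are illustrative.

```python
from statistics import mean

def summarize_windows(samples, window_s=60):
    """Reduce (timestamp_s, value) samples to one summary per time window."""
    windows = {}
    for ts, value in samples:
        start = int(ts // window_s) * window_s  # start of the window ts falls in
        windows.setdefault(start, []).append(value)
    return [
        {"start": start, "min": min(v), "max": max(v), "mean": mean(v), "count": len(v)}
        for start, v in sorted(windows.items())
    ]

# Hypothetical input: one reading per second for two minutes.
samples = [(t, 1.0 + (t % 10) * 0.1) for t in range(120)]
summaries = summarize_windows(samples)
print(len(samples), "raw samples ->", len(summaries), "summaries")
```

The trade-off is detail: summaries are enough for trend dashboards at a central site, while the edge node keeps the raw stream for immediate local alerting.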

Reducing errors and increasing workplace safety

Workplace accidents can happen in any industry, and manufacturing is no stranger to them. Many industrial facilities use heavy, potentially dangerous machinery, and 5,190 fatal work injuries occurred in the US alone in 2021, so it is easy to see how critical improving workplace safety is to protecting human life.

Leading this movement are “smart manufacturing” and “smart security”. These technologies get industrial IoT (IIoT) sensors working together to provide real-time monitoring and alerts, enabling early detection of and response to potential safety hazards on-premises. Overall, this has cut work-related illnesses by 50% in construction, an industry notorious for having one of the highest accident rates.
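
“Sensors working together” can be as simple as rules that combine readings from different devices in the same zone. In the hypothetical Python sketch below, a gas reading escalates from a warning to an evacuation when a presence sensor shows someone in the zone, and a person near a running machine triggers a stop. The zone name, sensor fields, and 50 ppm limit are illustrative assumptions, not safety guidance.

```python
from dataclasses import dataclass

@dataclass
class ZoneState:
    gas_ppm: float          # from a gas detector
    person_present: bool    # from a presence or wearable sensor
    machine_running: bool   # from the machine controller

# Hypothetical limit for illustration only; real limits come from the
# site's safety standards.
GAS_LIMIT_PPM = 50.0

def hazards(zone: str, s: ZoneState) -> list[str]:
    """Combine readings from several sensors into zone-level alerts."""
    alerts = []
    if s.gas_ppm > GAS_LIMIT_PPM:
        # Escalate when someone is actually in the affected zone.
        level = "EVACUATE" if s.person_present else "WARN"
        alerts.append(f"{level}: {zone} gas at {s.gas_ppm} ppm")
    if s.person_present and s.machine_running:
        alerts.append(f"STOP: person inside {zone} while machine is running")
    return alerts

print(hazards("press-cell-2", ZoneState(gas_ppm=62.0, person_present=True, machine_running=True)))
```

No single sensor could produce these alerts on its own; the value comes from evaluating them together in real time.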

Predictive maintenance

In the past, much machine maintenance was reactive rather than proactive. This caused considerable downtime, over 15 hours per week in fact, with unplanned downtime costing automotive manufacturers as much as $22,000 per minute and annual costs estimated at $50 billion. With industrial IoT monitoring, organizations can plan maintenance ahead of time: they can order the parts and resources required in advance, understand costs before work begins, and even avoid shortfalls against production quotas by moving production to other areas of the factory if needed.
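
As a simple illustration of planning ahead, the Python sketch below fits a straight line to recent sensor readings and extrapolates when they will cross an alarm limit. The daily vibration values and the 4.5 mm/s limit are hypothetical, and linear extrapolation is only the most basic approach; production predictive-maintenance systems typically use richer models.

```python
def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, my - slope * mx

def days_until_limit(days, readings, limit):
    """Extrapolate a linear trend to estimate days left before `limit` is reached.

    Returns None if the trend is flat or improving.
    """
    slope, intercept = fit_line(days, readings)
    if slope <= 0:
        return None
    crossing_day = (limit - intercept) / slope
    return max(0.0, crossing_day - days[-1])

# Hypothetical daily vibration readings (mm/s) from a bearing that is wearing.
days = [0, 1, 2, 3, 4, 5, 6]
vibration = [2.0, 2.1, 2.3, 2.4, 2.6, 2.7, 2.9]
remaining = days_until_limit(days, vibration, limit=4.5)
print(f"schedule maintenance within ~{remaining:.0f} days")
```

With these numbers the trend rises 0.15 mm/s per day and reaches the limit in roughly 11 days, enough lead time to order parts and shift production before an unplanned stop.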

Recommendations

It is critical for industrial organizations to understand their uptime and response-time requirements and the different ways in which industrial IoT data can be collected, analyzed, and used. Technology and budget constraints have a major impact on IT architecture decisions, but it is clear that no single architectural approach meets all industrial IoT requirements. IT and application architects should plan for and implement:

- High availability systems in each remote location to support distributed, real-time decision-making and corrective actions to ensure quality and safety

- Centralized systems to support corporate-wide quality initiatives, compliance reporting, supply-chain management, and improved analytics

- Centralized systems that incorporate suppliers into a corporate-wide supply chain dashboard to avoid production delays and product recalls

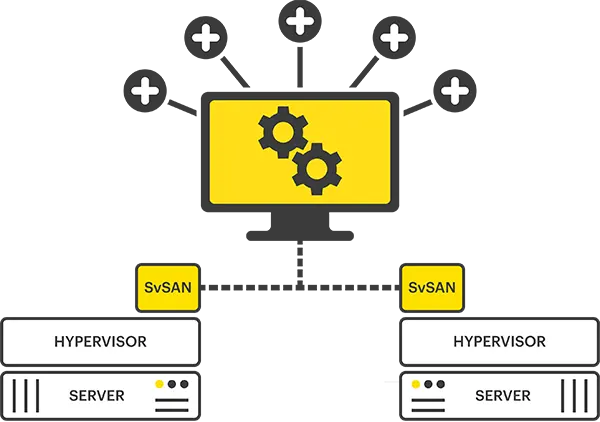

StorMagic helps manufacturing organizations create highly available, centralized systems for industrial IoT applications. StorMagic SvSAN is a hyperconverged storage solution that provides high availability with clusters of server nodes that can be located in manufacturing facilities and offices, providing the uptime and availability necessary for business-critical processes and data. Server nodes running SvSAN can be separated across buildings and entire sites for added resiliency, ensuring production lines keep moving, productivity remains high, and revenue isn’t lost. For more information on how SvSAN helps manufacturing organizations, explore case studies, resources, and further reading on the manufacturing use case page.