StorMagic SvSAN: Simple hyperconverged storage

StorMagic SvSAN is a virtual SAN – a software-defined solution designed to run on two or more servers and deliver highly available hyperconverged storage.

SvSAN simplifies your IT infrastructure. It eliminates the need for a physical SAN by virtualizing the internal compute and storage of any x86 server and presenting it via a hypervisor as shared storage.

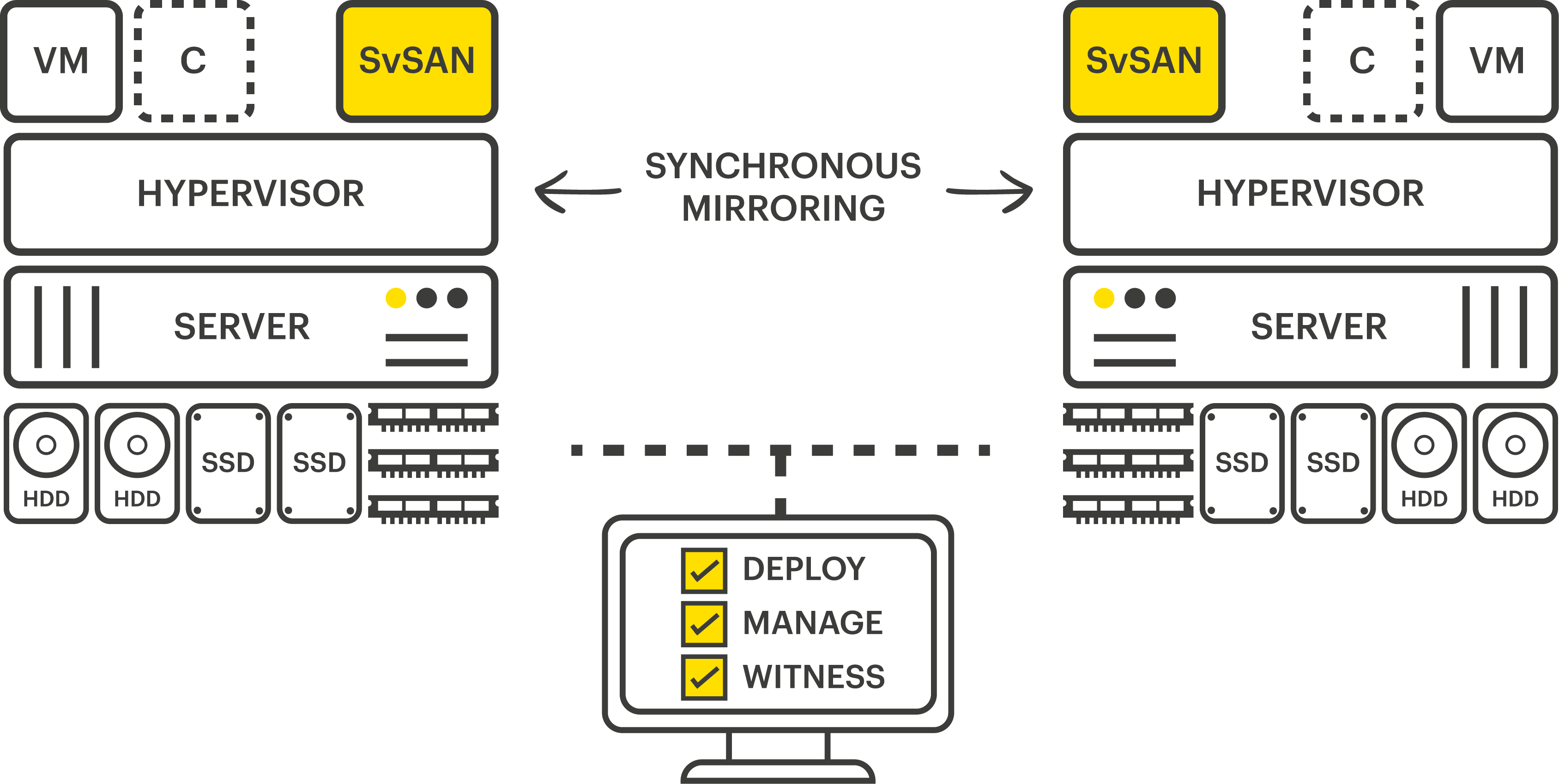

SvSAN requires only two servers, unlike other virtual SANs. A typical 2-node SvSAN configuration, with a centralized management interface and witness, is shown above.

This SvSAN data sheet is broken down into four sections, covering SvSAN’s features, its requirements, hardware and software compatibility and finally support levels.

For a more detailed technical examination of StorMagic SvSAN, including deployment options and use cases, please refer to the SvSAN Technical Overview white paper.

SvSAN’s Features

StorMagic SvSAN has a range of features enabling the storage architect to get the most out of their infrastructure. These features are detailed in the SvSAN Feature Table at the end of this data sheet.

All of the features necessary for highly available shared storage are included in an SvSAN license, including the witness. There are also two performance and security-enhancing add-ons available, which are SvSAN’s caching and data encryption features. Many of these features are covered in more detail within their own white papers. View the full range on the StorMagic website.

SvSAN provides highly available hyperconverged storage on just two nodes using a lightweight witness. The SvSAN witness prevents split-brain scenarios from occurring in 2-node SvSAN clusters by regularly checking the state of each node in the cluster. The only data passing between the witness and the SvSAN nodes is the ‘heartbeat’ – the witness is not in the data path. This allows it to tolerate significant latency and low bandwidth. For more details, see the SvSAN Witness data sheet.
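The tiebreaker role described above can be illustrated with a short sketch. It is not StorMagic's implementation: the `NodeView` class, the `nodes_allowed_to_serve` function, and the "lowest name wins" rule are hypothetical and only model the general quorum idea. While the two nodes can reach each other, both stay active. If the mirror link fails, at most one node that can still reach the witness is allowed to continue.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeView:
    """What one storage node can currently reach (hypothetical model)."""
    name: str
    sees_partner: bool  # heartbeat to the other storage node is healthy
    sees_witness: bool  # heartbeat to the witness is healthy


def nodes_allowed_to_serve(a: NodeView, b: NodeView) -> set:
    """Return the names of the nodes that may keep serving a mirrored volume."""
    if a.sees_partner and b.sees_partner:
        # Mirror link is healthy: both nodes stay active.
        return {a.name, b.name}
    # Mirror link is down: only nodes that still reach the witness are
    # candidates, and at most one is chosen so the two copies cannot diverge.
    # Picking the lowest name is an arbitrary, illustrative tiebreak.
    candidates = sorted(n.name for n in (a, b) if n.sees_witness)
    return set(candidates[:1])


if __name__ == "__main__":
    partitioned = (NodeView("node-a", False, True), NodeView("node-b", False, True))
    print(nodes_allowed_to_serve(*partitioned))
```

In the partitioned case both nodes still reach the witness, yet only one is allowed to serve storage, which is the split-brain protection the paragraph describes.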

SvSAN’s caching features include write-back and read-ahead caching, as well as data pinning. Collectively these features are known as Predictive Storage Caching and can dramatically improve an organization’s storage performance without requiring significant investment in new hardware.

SvSAN’s data encryption feature enables organizations to encrypt the data being mirrored by SvSAN. This allows vulnerable locations and the data they hold to be protected. It is FIPS 140-2 compliant, eliminates the need for expensive OS or hypervisor-level solutions, and is compatible with any KMS that uses KMIP, including StorMagic’s own key manager, SvKMS.

StorMagic offers a no-cost Container Storage Interface (CSI) driver for container use with SvSAN. This provides persistent, highly available storage for Kubernetes-orchestrated container deployments. SvSAN supports VMs, containers, or both on the same 2-node cluster. For full details of the CSI driver’s system requirements and compatibility, and more information about this deployment option, refer to the SvSAN Container Storage Interface Driver Data Sheet.

Monitoring and management of SvSAN is carried out by StorMagic Edge Control. This cloud-based web console communicates with all of an organization’s SvSAN clusters via a VM known as the orchestrator, which resides on the same corporate network as the SvSAN clusters. Edge Control simplifies and centralizes SvSAN management, eliminating the need for multiple tools or interfaces. More information about Edge Control, including the orchestrator’s system requirements, is available in the Edge Control data sheet.

StorMagic SvSAN is licensed based on the usable VSA storage capacity. License tiers are set at 2TB, 6TB, 12TB, 24TB, 48TB and Unlimited TB.

SvSAN is available as a perpetual or subscription license. After a single payment, perpetual SvSAN licenses can be used forever, with ongoing costs only for support renewal payments. Subscription SvSAN licenses are paid for upfront for a specific time period, one year for example, and then renewed as required. Pricing is based on a single SvSAN license – the number of nodes in the cluster defines how many licenses are required.

For example, two SvSAN licenses are required for a normal 2-node deployment. More information can be found on the SvSAN Pricing webpage.
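The licensing rules above can be worked through in a few lines. The sketch below is illustrative only: the function names are hypothetical, and it assumes that a capacity exactly equal to a tier limit fits within that tier and that the smallest sufficient tier is the one chosen. The tier sizes and the one-license-per-node rule come from the text.

```python
import math

# Usable-capacity tiers in TB, as listed in the text.
# math.inf stands in for the "Unlimited TB" tier.
LICENSE_TIERS_TB = (2, 6, 12, 24, 48, math.inf)


def smallest_tier_tb(usable_tb: float) -> float:
    """Return the smallest license tier that covers the usable VSA capacity."""
    if usable_tb <= 0:
        raise ValueError("usable capacity must be positive")
    return next(tier for tier in LICENSE_TIERS_TB if usable_tb <= tier)


def licenses_required(nodes: int) -> int:
    """One license per node; SvSAN runs on two or more servers."""
    if nodes < 2:
        raise ValueError("an SvSAN cluster needs at least two nodes")
    return nodes


if __name__ == "__main__":
    # A 2-node cluster with 5TB of usable capacity per VSA.
    print(licenses_required(2), "licenses at the", smallest_tier_tb(5), "TB tier")
```

Under these assumptions, a 2-node cluster with 5TB of usable capacity needs two licenses at the 6TB tier.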

A free, fully functional evaluation of SvSAN is available to download, enabling organizations to trial and experience the features and benefits of SvSAN before purchasing.

For more information and to download an evaluation copy, visit: stormagic.com/trial.

System requirements

SvSAN has very low minimum hardware requirements, detailed in the system requirements table below.

Witness system requirements

The witness sits separately from the SvSAN nodes and therefore has its own minimum requirements, listed in the witness requirements table below. The witness should be installed on a server separate from the SvSAN VSA.

Hardware and software compatibility

SvSAN works with any x86 server that is listed on the VMware vSphere ESXi or Microsoft Hyper-V Hardware Compatibility List (HCL). Furthermore, SvSAN works with any supported internal server disk storage or JBOD array and, thanks to its software RAID capability, supports servers without hardware RAID controllers.

Hypervisor support

SvSAN supports VMware vSphere, Microsoft Hyper-V and Linux KVM hypervisors. It is installed as a Virtual Storage Appliance (VSA) requiring minimal server resources to provide the shared storage necessary to enable the advanced hypervisor features.

SvSAN is supported on the versions of VMware vSphere ESXi, Microsoft Windows Server/Hyper-V Server, and Linux KVM distributions listed in the hypervisor compatibility table below.

If VMware vSphere is chosen as the hypervisor to deploy with SvSAN, StorMagic recommends vSphere Essentials Plus as a minimum to enable high availability.

For further details of SvSAN’s capabilities on KVM hypervisors, please refer to the SvSAN with KVM data sheet.

VMware vCenter support

With the dedicated plugin, SvSAN can be managed directly from VMware vCenter. SvSAN is compatible with the versions of vCenter in the table below.

VMware vCenter versions compatible with SvSAN:

| VMware vCenter version | SvSAN version | |||

| 6.0 | 6.1 | 6.2 | 6.3 | |

| VMware vCenter Server 8.0 & updates | • | |||

| VMware vCenter Server 7.0 & updates | • | • | ||

| VMware vCenter Server 6.7 & updates | • | |||

| VMware vCenter Server 6.5 & updates | • | • | • | |

| VMware vCenter Server 6.0 & updates | • | • | ||

StorMagic SvSAN system requirements:

| Component | Requirement |
|---|---|
| Servers | 2 servers required • 3 optional for high availability during offline upgrades, maintenance, etc. |
| CPU | 1 x virtual CPU core¹ • 2GHz or higher reserved |
| Memory | 1GB RAM² |
| Disk | 2 x virtual storage devices used by VSA • 1 x 512MB boot device • 1 x 20GB journal disk |
| Network | 1 x 1Gb Ethernet • Multiple interfaces required for resiliency • 10Gb Ethernet is supported • Jumbo frames supported |

² Additional RAM may be required when caching is enabled.

Requirements for containers

The following software versions are required to deploy containers on StorMagic SvSAN:

| Software | Version |
|---|---|
| StorMagic SvSAN | From 6.2 Update 5 |
| SvSAN CSI driver | 1.0.0 |
| VMware vSphere | 7.0 |
| Kubernetes | 1.19 or 1.20 |
| VMware Tanzu Kubernetes Grid¹ | 1.2 |

SvSAN witness requirements:

| Component | Requirement |
|---|---|
| CPU | 1 x virtual CPU core (1 GHz) |
| Memory | 1GB (reserved) |
| Disk | 1GB VMDK |
| Network | 1 x 1Gb Ethernet NIC. When using the witness over a WAN link, the following are recommended for optimal operation: • Latency of less than 3000ms, which allows the witness to be located anywhere in the world • 9Kb/s of available network bandwidth between the VSA and witness |
| Operating System | The SvSAN witness can be deployed onto a physical server or virtual machine running one of the following: • Windows Server 2019 and 2022 (64-bit) • Hyper-V Server 2019 (64-bit) • Raspbian Buster (32-bit) • vCenter Server Appliance (vCSA)¹ • StorMagic SvSAN Witness Appliance • Ubuntu 20.04 |
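The WAN guidance in the Network row can be expressed as a simple pre-deployment check. This is a minimal sketch rather than a StorMagic tool: `witness_link_ok` and its parameters are hypothetical names, and the bandwidth figure is assumed to be measured in the same Kb/s unit the data sheet uses.

```python
# Thresholds taken from the witness requirements table.
MAX_LATENCY_MS = 3000      # latency must be below this value
MIN_BANDWIDTH_KBPS = 9     # available bandwidth between VSA and witness


def witness_link_ok(latency_ms: float, bandwidth_kbps: float) -> bool:
    """Return True if a measured WAN link meets the published witness guidance."""
    return latency_ms < MAX_LATENCY_MS and bandwidth_kbps >= MIN_BANDWIDTH_KBPS


if __name__ == "__main__":
    # Example measurements for a hypothetical remote site.
    print(witness_link_ok(latency_ms=250, bandwidth_kbps=64))
```

The measurements themselves would have to come from whatever network monitoring is already in place; the sketch only compares them against the two published thresholds.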

Hypervisors compatible with SvSAN:

| Hypervisor | SvSAN version | ||||

| 6.0 | 6.1 | 6.2 | 6.3 | ||

| VMware | vSphere 8.0 & updates³ | • | |||

| vSphere 7.0 & updates² | • | • | |||

| vSphere 6.7 & updates | • | ||||

| vSphere 6.5 & updates¹ | • | • | • | ||

| vSphere 6.0 & updates | • | • | |||

| Microsoft | Windows Server 2022 | • | |||

| Windows Server 2019 | • | • | |||

| Hyper-V Server 2019 | • | • | |||

| Windows Server 2016 | • | • | |||

| Hyper-V Server 2016 | • | • | |||

| Linux KVM⁴ | Ubuntu 20.04 | • | • | ||

² vSphere 7.0 compatibility is available from SvSAN 6.2 Update 2 Patch 2 onwards.

³ vSphere 8.0 compatibility is available from SvSAN 6.3 Patch 1 onwards.

⁴ Linux KVM compatibility is available from SvSAN 6.2 Update 5 Patch 5 onwards.

SvSAN Maintenance & Support

SvSAN Maintenance & Support provides organizations with access to StorMagic support resources, including product updates, knowledgebase access and email support from our technical support staff.

Two levels are available. A summary of each is shown in the table below. More information on SvSAN Maintenance & Support can be found at stormagic.com/support.

| | Gold Support | Platinum Support |
|---|---|---|
| Hours of operation | 8 hours a day¹ (Mon – Fri) | 24 hours a day² (7 days a week) |
| Length of service | 1, 3 or 5 years | 1, 3 or 5 years |
| Product updates | Yes | Yes |
| Product upgrades | Yes | Yes |
| Access method | Email + Telephone (via platinum engagement form on support.stormagic.com) |
| Response method | Email + WebEx | Email + Telephone + WebEx |
| Remote support / WebEx | Yes | Yes |
| Maximum number of support administrators per contract | 2 | 4 |
| Response time | 4 hours | 1 hour |

² Global, 24×7 support for Severity 1 – Critical Down and Severity 2 – Degraded issues

SvSAN Feature Table

| SvSAN features |
|---|
| Synchronous mirroring/high availability • Data is written to two SvSAN VSA nodes to ensure service uptime • Write operations only complete once acknowledged on both SvSAN VSAs • In the event of a failure, applications are failed over to other available resources |
| Stretched/metro cluster support – white paper with more information • Separate nodes geographically to provide an added layer of resiliency • Different racks, separate rooms or buildings, or even across an entire city |
| Volume migration – white paper with more information • Transparently and non-disruptively migrate volumes from one storage location to another • Simple and mirrored volumes can be migrated between storage pools on the same SvSAN VSA node or to another SvSAN VSA node entirely |
| VMware Fault Tolerance feature • SvSAN deployed on VMware vSphere hypervisor enables the use of VMware’s Fault Tolerance feature on clusters of just two nodes • Fault Tolerance protected VMs see zero downtime or loss of service when one node goes offline • Keep critical applications online and running in the event of a node failure |
| VSA restore (VMware only) • Automates the recovery process of an SvSAN VSA node following a server failure or replacement • SvSAN VSA configuration changes are tracked and stored on another SvSAN VSA in the cluster • Mirror targets are rebuilt and resynchronized, enabling a quick return to optimal service • Simple targets can be automatically recreated, ready for data recovery from backup |
| VMware vSphere Storage API (VAAI) support (VMware only) • Accelerates VMware I/O operations by offloading them to SvSAN • Supports the Write Same, Atomic Test & Set (ATS) and UNMAP primitives |
| Centralized monitoring and management • Monitor and manage SvSAN from a single location with multiple options including WebGUI • Seamless integration with vCenter Web Client enabling alerts to be forwarded and captured on one screen • Email alert notifications using SMTP, and SNMP integration with support for v2 and v3 |
| Witness – white paper with more information • Acts as a quorum or tiebreaker and assists cluster leadership elections to prevent “split-brain” • Hundreds of locations can share a single witness and it tolerates low bandwidth, high latency WAN links • Supported configurations include local witness, remote shared witness or no witness |
| I/O performance statistics • Provides granular, historical I/O transaction, throughput and latency statistics for each volume • Simple, intuitive graphical presentation with minimum, maximum, and average values for daily, monthly, and yearly time periods • Data can be exported to CSV for further analysis |
| Multiple VSA GUI deployment and upgrade • Deploy and upgrade VSAs through a single wizard either immediately or in a staged approach for out-of-hours activity • SvSAN handles dependencies and performs a health check ensuring there is no impact to environments |
| PowerShell script generation • Deployments over many locations can be handled by generating a custom PowerShell script |
| Cluster-aware upgrades • Simplifies the process of upgrading multiple VSAs with full control over date/time and how many/which clusters to upgrade • Upgrades can be carried out on one or more SvSAN VSAs simultaneously, ensuring storage remains online throughout • Automated process stages the firmware, checks cluster health, then proceeds with upgrading each VSA in turn |
| Software RAID • Install SvSAN on servers without hardware RAID controllers, such as the Lenovo SE350 and HPE Edgeline EL8000 • Configure as RAID 0 (striping) or RAID 10 as required |
| Container Storage Interface (CSI) Driver – white paper with more information • Easily deploy containers with persistent, highly available storage • No additional costs to deploy containers in an SvSAN cluster • One platform for VMs, containers, or both |

| Additional add-on features available |
|---|
| Predictive read-ahead caching (SSD and memory) – white paper with more information • Beneficial to sequential read workloads – populates memory with data prior to being requested • Boosts performance by reducing I/O requests going to disk, instead serving data from low latency memory |
| Write-back caching (SSD) – white paper with more information • Utilizes SSDs to improve the performance of all write operations by lowering latencies and increasing the effective IOPS, resulting in faster response times, especially for random write workloads • All write I/Os are directed to the SSD, allowing completion to be immediately acknowledged back to the server. The data is written from SSD to the hard disk at a later time |
| Data pinning – white paper with more information • Allows data to permanently reside in memory, ensuring that data is always available in the highest performing, lowest latency cache tier, useful for frequently repeated operations such as booting virtual machines • Intelligent caching algorithms identify ‘hot’ and ‘cold’ data, elevating the ‘hot’ data to the highest performing, lowest latency storage tier (SSD or memory) |
| Data encryption – white paper with more information • Utilizes a FIPS 140-2 compliant algorithm (XTS-AES-256) to deliver encryption to all data handled by SvSAN or just selected volumes • Allows secure erasure and re-key • Compatible with any KMIP-compliant key management system including StorMagic SvKMS |
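The synchronous mirroring entry in the feature table states that a write only completes once it has been acknowledged on both SvSAN VSAs. The sketch below models just that acknowledgement rule on the healthy path. The `Replica` class and `mirrored_write` function are hypothetical, in-memory stand-ins, not SvSAN code, and failover, degraded operation, and resynchronization are deliberately left out.

```python
class Replica:
    """In-memory stand-in for one VSA's copy of a mirrored volume (hypothetical)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.online = True
        self.blocks = {}  # maps block address -> data

    def write(self, address: int, data: bytes) -> bool:
        """Store the block and return True as the acknowledgement, if online."""
        if not self.online:
            return False
        self.blocks[address] = data
        return True


def mirrored_write(first: Replica, second: Replica, address: int, data: bytes) -> bool:
    """Report completion only when both replicas have acknowledged the write."""
    acks = [replica.write(address, data) for replica in (first, second)]
    return all(acks)


if __name__ == "__main__":
    vsa1, vsa2 = Replica("vsa-1"), Replica("vsa-2")
    print(mirrored_write(vsa1, vsa2, 0, b"payload"))  # both acknowledge
    vsa2.online = False
    print(mirrored_write(vsa1, vsa2, 1, b"payload"))  # second ack is missing
```

Because completion is reported only after both copies hold the data, either node can take over without losing acknowledged writes, which is the property the feature table relies on for failover.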